History of Artificial Intelligence: From Concept to Reality

Explore the history of Artificial Intelligence, tracing its journey from early concepts to today’s cutting-edge applications. Discover the evolution of artificial intelligence, the development of AI technology, and the historical milestones in AI research that transformed the world.

The story of Artificial Intelligence (AI) is one of the most fascinating technological journeys in human history. From philosophical musings about intelligent machines to the integration of AI into our daily lives, the evolution of this field reflects a remarkable blend of ambition, creativity, and perseverance. This article explores the origins, key milestones, challenges, and promising future of AI development.

Philosophical Foundations: Paving the Way for AI

The roots of Artificial Intelligence trace back centuries before computers existed. Ancient Greek philosophers like Aristotle introduced logic systems, while later thinkers, such as René Descartes, explored the mechanics of human thought and reasoning.

These early ideas created a foundation for the eventual pursuit of machines capable of replicating human intelligence. The Renaissance and Enlightenment periods further expanded on these concepts, fostering scientific curiosity that would fuel the next stage of AI.

Fig: classical painting of philosophers debating logic and intelligence in a grand (Generated Art)

The Theoretical Foundations: Alan Turing’s Vision

A turning point came in the 20th century with the rise of computational theory. Alan Turing, often regarded as the father of Artificial Intelligence, laid the groundwork with his 1936 concept of a “universal machine” capable of carrying out any computation that can be described as an algorithm. In his 1950 paper “Computing Machinery and Intelligence,” he proposed what became known as the Turing Test, which evaluates a machine’s capacity to display intelligent behavior indistinguishable from that of a human.

Turing’s groundbreaking work laid the theoretical groundwork for machine learning, sparking widespread interest in the idea of intelligent machines.

Fig: A monochrome illustration of Alan Turing in a vintage laboratory (Generated Art)

The Dartmouth Conference: Birth of Artificial Intelligence

The field of Artificial Intelligence formally began in 1956 during the Dartmouth Conference, organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon. This event not only introduced the term “Artificial Intelligence” but also outlined ambitious goals to simulate aspects of human intelligence, such as problem-solving and reasoning.

While the initial optimism led to significant funding and research, it also set expectations that were difficult to meet with the limited technology of the time.

Symbolic AI and Early Successes

The late 1950s and 1960s saw the development of symbolic AI, which relied on logical symbols to represent problems and solutions. Programs like the General Problem Solver (GPS) and ELIZA, an early chatbot, demonstrated the potential of AI to emulate human reasoning and conversation.

Despite these successes, symbolic AI systems were rigid and struggled with tasks requiring adaptability or learning, highlighting the limitations of early approaches.

Fig: A retro computer terminal from the 1960s or 1970s displaying a conversation with ELIZA (Generated Art)

Fig: A stark and cold laboratory filled with defunct computers and a symbolic ‘frozen’ robot (Generated Art)

The AI Winter: A Period of Disillusionment

The initial excitement surrounding AI research began to fade by the 1970s. Overambitious claims, coupled with limited computational power and high costs, led to widespread skepticism. Funding dried up, and the field entered a period known as the “AI Winter.”

However, during this time, researchers continued to explore alternative approaches, such as neural networks, that would later prove instrumental in advancing the field.

Expert Systems and the Revival of AI

The 1980s brought a resurgence of interest in AI with the development of expert systems. These programs used rule-based logic to replicate human expertise in specific fields, such as medical diagnosis or engineering design. Examples like MYCIN and XCON demonstrated the practical applications of AI in real-world scenarios.

This period also saw the growth of AI development in industrial applications, paving the way for further advancements in the coming decades.

Fig: Consultation between a doctor and a highly advanced computer system (Generated Art)

Fig: Deep Blue playing chess against Garry Kasparov (Generated Art)

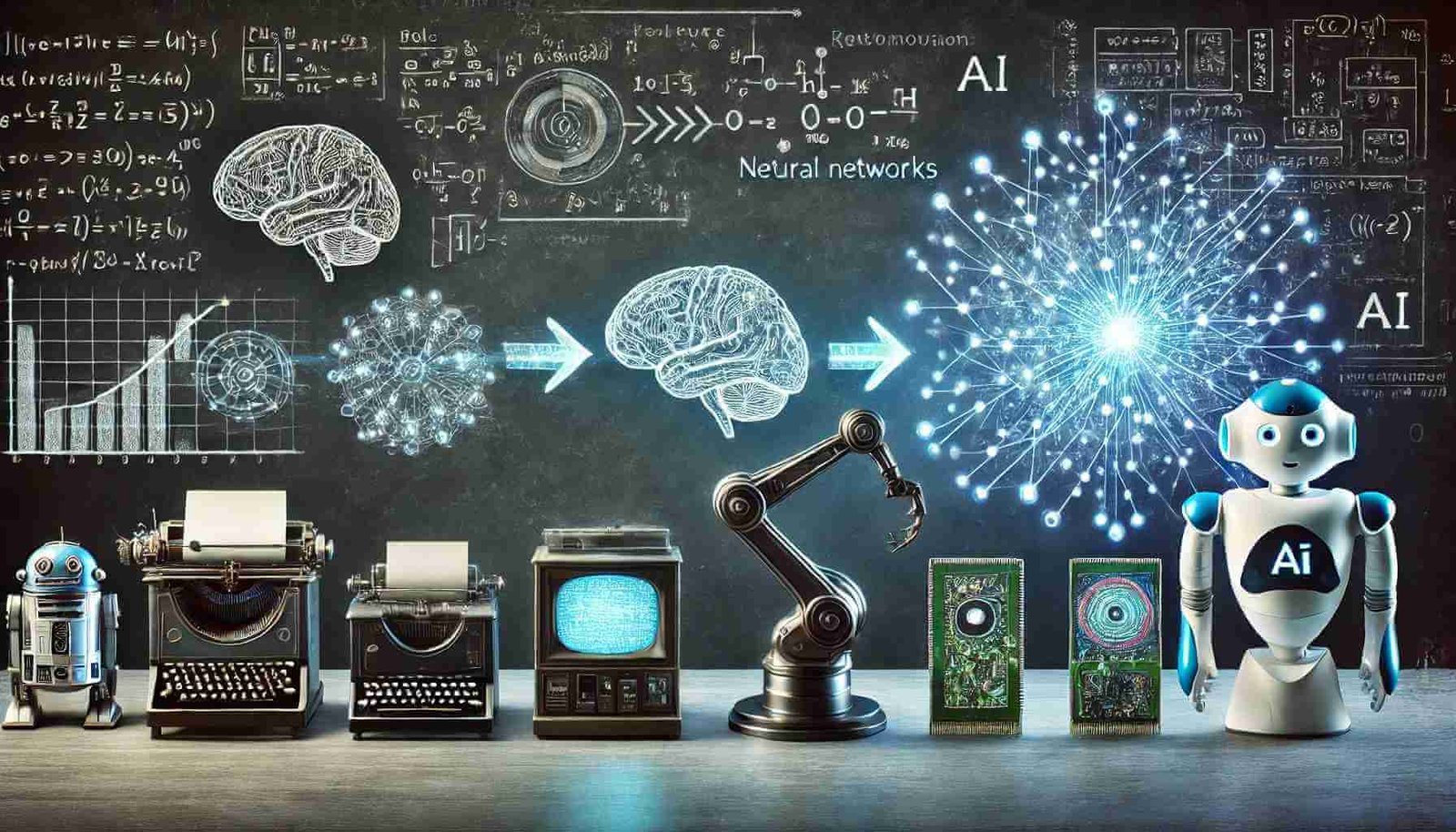

The Shift to Machine Learning

By the 1990s, the focus of Artificial Intelligence research shifted from symbolic approaches to machine learning, where systems learn and adapt from data rather than following hand-written rules. This shift marked a significant milestone in the evolution of artificial intelligence, enabling breakthroughs in pattern recognition and predictive analytics.

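A landmark achievement came in 1997 when IBM’s Deep Blue defeated world chess champion Garry Kasparov, showcasing the potential of AI in strategic thinking, although Deep Blue itself relied mainly on large-scale search and hand-tuned evaluation rather than learning from data.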

The Era of Deep Learning

Deep learning, a branch of machine learning built on multi-layered artificial neural networks, gained popularity in the 2010s. Loosely inspired by the structure of the human brain, these networks allow machines to process and interpret complex data such as images, text, and speech. Deep learning fueled significant advancements across industries:

- Self-driving cars began navigating real-world environments.

- AI systems matched or approached human-level performance on specific benchmarks in tasks like image recognition and language processing.

- AlphaGo’s victory over Go world champion Lee Sedol in 2016 demonstrated the power of combining deep neural networks with reinforcement learning.

AI in Everyday Life

Today, Artificial Intelligence is ubiquitous in modern life. Chatbots improve consumer interactions, recommendation systems personalize content on streaming services like Netflix, and AI powers virtual assistants like Alexa and Siri. Its role extends beyond convenience. In healthcare, it aids in diagnosing diseases and developing treatment plans. In finance, it supports risk assessment and fraud detection.

Despite its successes, AI faces significant challenges. Ethical concerns, such as algorithmic bias and data privacy, remain critical issues. Policymakers and researchers must address these concerns while ensuring innovation thrives.

Looking ahead, the development of AI technology is poised to achieve even greater milestones:

- General AI, capable of performing a wide range of tasks at a human level rather than a single narrow task, remains a long-term goal.

- AI-integrated quantum computing could, if the hardware matures, offer significant gains in computational power for certain problems.

- Collaborative AI systems aim to enhance human productivity and creativity.

As the field evolves, artificial intelligence will continue to shape industries, redefine possibilities, and push the boundaries of human imagination.